To gain more insight into AI in combination with music, I chose the Bozar act, which was marked with a star in the Ars Electronica festival program. "Live coding expert and drummer Dago Sondervan and multi-instrumentalist Andrew Claes team up for an experimental exploration of artificial intelligence in music performance. Armed with an arsenal of specifically developed tools and applications, the duo will train a virtual agent towards musical autonomy and realtime interaction, becoming a trio along the way" (Palais des beaux-arts de Bruxelles – Musical creation and innovation with AI, 2020).

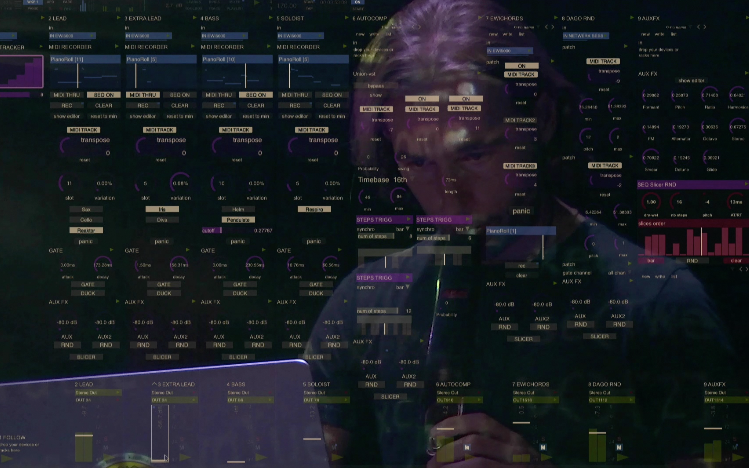

The mixture of human performers and an AI agent was very interesting to me because I had never seen a performance like that. In the concert itself, the music sounded very abstract and technical to me, which made it feel futuristic. Afterwards, Dago Sondervan, Andrew Claes and Dr. Frederik De Bleser gave an interview about their performance. To create the sounds, the artists use patching software in which they record sounds and program them with shifts of random notes; this is where AI comes into play. It receives input in the form of images, shifting notes and messages, which trigger it to produce output. They describe this as a combination of generative and adaptive systems with AI. Dr. Frederik De Bleser explains that the AI assists them and learns from them as a creative partner. The performer plays a piece of music, the intelligent assistant adds its own input, and this creates a feedback loop, a kind of dialogue between the artist and the AI.

It is a very special kind of music. In my opinion it cannot be described as melodic, because it has many layers of sound and is very textured, but the artists also aim to make the music sound like machines. In addition, the more complex the AI becomes, the harder it is to control. This raises the question of whether there is a limit for AI or not. The interviewer and the artists discussed this briefly, which I found interesting because I had never thought about the role of AI in the music sector. The AI can be a black box: surprising but also uncontrollable, because according to the artists, what it understands is sometimes completely wrong. It needs more and more information, but it is hard to train. You can give it more context, but that makes it more difficult to handle.

In conclusion, I can say that AI works well in live performances as a way to show what it can do, even though the music did not sound melodic to me. I can imagine it being used more commercially to make it accessible to everyone, to create custom-made music and offer an individual music experience, as mentioned in the interview. At the moment, though, it can be hard to handle or expensive. Maybe the interest of some companies will change this in the future, because AI is still seen as a scary and unfamiliar tool.

Sources:

Palais des beaux-arts de Bruxelles – Musical creation and innovation with AI. (2020, September 13). Bot Bop: Musical creation and innovation with AI | Concert & Talk | BOZAR x Ars Electronica [Video]. YouTube. https://www.youtube.com/watch?v=8kOKv8DQ__U