M Eifler created a project called Prosthetic Memory after a serious brain injury severely impaired her memory. Her long-term memory is dramatically reduced and can hold only a little information. Instead of recalling important events from her life, she can remember only simple information, such as her phone number or how to ride a bike.

Prosthetic Memory has three components that work together to give her access to her memories through digital assistance.

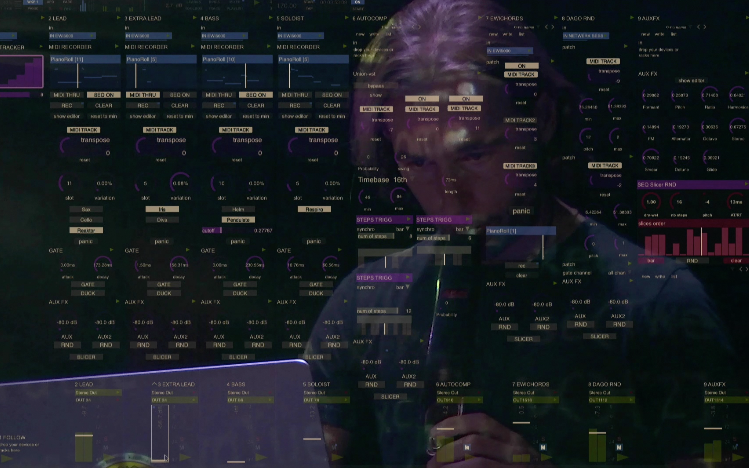

- A custom machine learning algorithm that acts as a bridge between the physical world she interacts with and the virtual memories that make up her prosthetic (additional) memory.

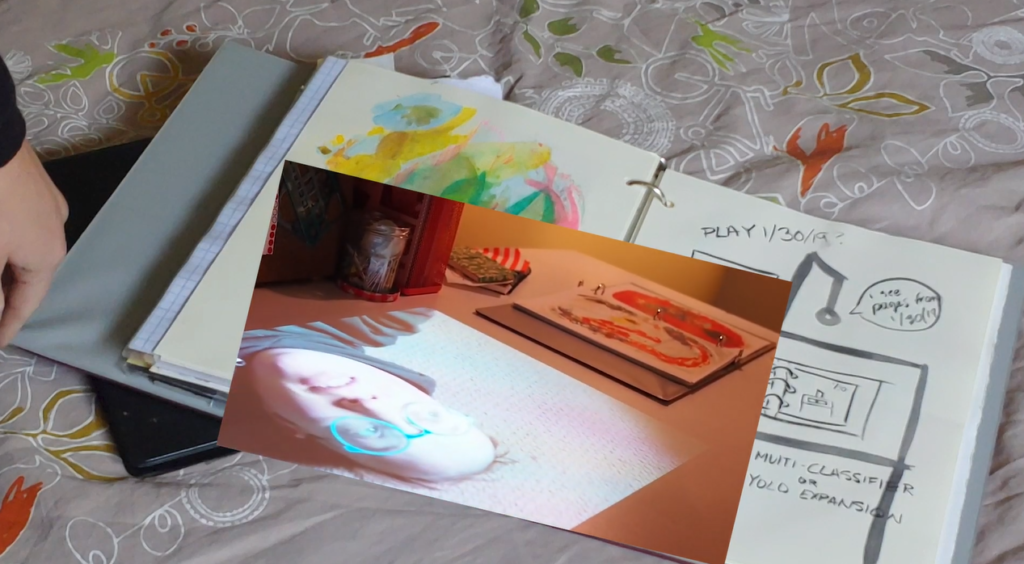

- Handmade paper journals that include drawings, collages, and writing. They are digitally registered in a database and work as triggers for memories.

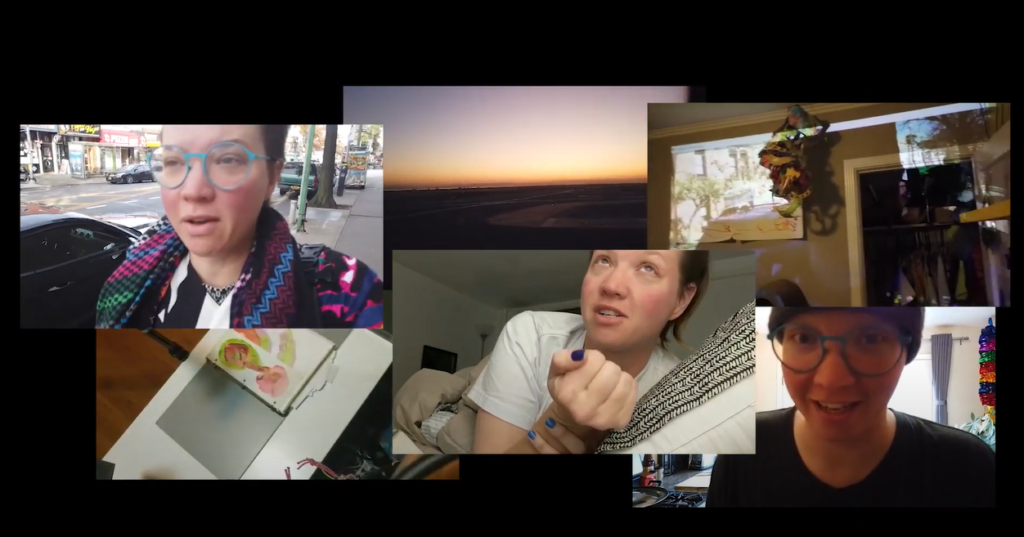

- Video documentation, similar to vlogging, that captures the events, emotions, reactions, and feelings of her everyday life.

When a picture from the paper journals is shown to the AI’s camera, the network recognizes the visual trigger and gives her access to all the files documented on the day the paper artwork was created. Cameras placed all around her house monitor her actions and can offer access to related memories depending on the task she is performing. Objects and tools around her house are also tracked by the AI, so she can revisit memories related to the object she is using.
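To make the lookup described above more concrete, here is a minimal sketch of how a recognized journal page could lead to a day's recordings. It is only an illustration and not Eifler's actual implementation: the `MemoryArchive` class, its method names, the page label, the dates, and the file names are all hypothetical, and the image recognition step is assumed to have already produced a label for the page.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class MemoryArchive:
    """Hypothetical store linking journal pages to a day's recordings."""

    # page label (as output by an assumed recognizer) -> date the page was made
    page_dates: dict = field(default_factory=dict)
    # date -> names of the files documented on that day
    files_by_day: dict = field(default_factory=dict)

    def register_page(self, label: str, created: date) -> None:
        """Register a journal page as a trigger for the day it was created."""
        self.page_dates[label] = created

    def add_file(self, day: date, filename: str) -> None:
        """Attach a documented file (for example a video) to a day."""
        self.files_by_day.setdefault(day, []).append(filename)

    def recall(self, label: str) -> list:
        """Return every file from the day the recognized page was created."""
        day = self.page_dates.get(label)
        if day is None:
            return []  # the page was never registered
        return list(self.files_by_day.get(day, []))


# Made-up sample data, for illustration only.
archive = MemoryArchive()
archive.register_page("collage-blue-bird", date(2019, 3, 14))
archive.add_file(date(2019, 3, 14), "vlog-morning.mp4")
archive.add_file(date(2019, 3, 14), "vlog-evening.mp4")
archive.add_file(date(2019, 3, 15), "vlog-next-day.mp4")

print(archive.recall("collage-blue-bird"))
```

The design choice worth noticing is that the journal page does not store the memories itself. It only points to a date, and the date is what gathers the recordings together, which matches the idea of the artwork acting as a trigger.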

I believe that interaction design has a lot to offer people with disabilities, and that it can use technology in the most efficient way possible to assist people with special needs. The project “Prosthetic Memory” is very inspiring and fuels a lot of creativity in that area.