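Title: Trends und Anforderungen an Corporate Design im Kontext von E-Commerce – Entwicklung eines Corporate Design Manuals für den Onlineshop binfree (Trends and Requirements for Corporate Design in the Context of E-Commerce: Development of a Corporate Design Manual for the Online Shop binfree)

Author: Theresa Winds

University: Master’s programme in Information Design and Media Management (Informationsdesign und Medienmanagement), Hochschule Merseburg

Year: 2018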

Category: Interaction Design

Critical evaluation of an external master’s thesis

Title of work: Gamification as a marketing and customer loyalty tool

Type of work: Master’s thesis

Author: Sebastian Eschenbacher

Date & Place: Mittweida University, 27.12.2014

Course of studies: Industrial Management

Evaluation of an external master’s thesis

Werzowa Ludwig

Diploma Thesis, TU Wien. 26/1/2020

Notifications via vibrotactile signals.

Level of design

The structure of this thesis is built upon two pillars. First, the author presents the theoretical part and the research conducted on the topic. The second pillar is the practical application of this knowledge in programming and building a wearable device. The two pillars, theoretical analysis and practical work, are initially presented in order and later alternate, since the project was developed using a research-test-evaluate-research approach.

Degree of innovation

The degree of innovation is satisfactory. The author neither invents a new technology nor introduces a brand-new approach to vibrotactile communication. Instead, he takes a user-centered approach to the generic vibration signals of today’s devices and builds upon them. Inspired by dissatisfaction with notification systems on smart devices, he uses vibration in a better-designed, more human-centered way. Normally the user has to adapt to a device’s vibration signals; the author successfully reverses this relationship and adapts the vibration signals to the user’s needs.

Independence

The level of independence of this work is significant. Although the research on vibration as a communication medium was intended to replace auditory notifications on smartphones, its findings and its analysis of how humans respond to vibration can be fruitful in many other areas where communication via vibrotactile interfaces (vibrotactile = vibration perceived through touch) is appropriate.

Outline and structure

The presentation of this research mirrors the author’s actual journey toward completing the project: the order of research, work and evaluation presented in the thesis corresponds to the order the author actually followed.

Degree of communication

Right after a short introduction to notifications and vibration as a communication medium, the author presents the questions that the following pages will answer. I find this a good way of presenting research: by encountering the research questions early on, the reader gets a chance to relate to them and builds up the desire to find out the answers.

Scope of work

The presentation of the technical work, which includes the coding and the hardware assembly, was clear and intuitive. The code and the application structure were analyzed and explained. Additionally, the full code was provided in the literature as a website link, so that readers familiar with the scripting language used could analyze it further without the author’s simplification and explanation.

Spelling

I did not notice any significant spelling mistakes. The only thing that became apparent to me in this area was that the tone of the language changed from time to time, from formal, analytical language to a colloquial tone.

Accuracy and precision

Regarding the qualitative part of the research, the author chose to present not only his findings and the conclusions drawn from them, but also the actual questionnaire that he designed and used when conducting the research.

Literature

The proportions of analog and digital sources were somewhat balanced, although online resources were favored. Given the nature of the project and the coding and hardware research it required, I find it inevitable that web links would dominate the literature.

I was very happy to find and read this thesis because it gave me more than just material for an analysis of how a thesis is constructed: it also gave me valuable data for my own upcoming thesis research. Coincidentally, I am also interested in developing a wearable device that communicates with the user through vibrotactile signals. This thesis serves as a great foundation for me.

Master’s thesis – Lara, J.A.: POLKU. Designing an interactive sound installation based on soundscapes

The work presented here, POLKU, is a sound installation that attempts to transport Finnish soundscapes to another place, namely Japan. The exhibit consists of a kind of “path” that users walk along, flanked by loudspeakers, while hearing everyday urban and rural Finnish sounds. These soundscapes are assembled at random and are essentially collages. Which sounds play together differs for each user and depends on the user’s body weight: the sound engine is connected to scales on the floor of the loudspeaker path. Depending on how heavy the people walking along the path are, they are assigned a different ID, which influences the step-by-step composition of the sounds. This gives each user an individual soundscape experience.
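The weight-to-ID mechanism described above can be illustrated with a minimal Python sketch. It is not the installation's actual sound engine: the sound names, the 5 kg weight bands, and the functions `visitor_id` and `soundscape_for` are all assumptions made for illustration. The only ideas taken from the description are that a scale reading yields an ID and that the ID influences a random, step-by-step selection of sounds.

```python
import random

# Hypothetical sound pools; the description only mentions urban and
# rural Finnish everyday sounds, not these specific names.
URBAN = ["tram", "market", "harbour", "cafe"]
RURAL = ["forest", "lake", "sauna", "birds"]

BUCKET_KG = 5  # assumed width of one weight band


def visitor_id(weight_kg):
    """Map a scale reading to a coarse ID (assumption: one ID per 5 kg band)."""
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    return int(weight_kg // BUCKET_KG)


def soundscape_for(weight_kg, steps=4):
    """Build a step-by-step collage; the visitor ID seeds the random choices."""
    rng = random.Random(visitor_id(weight_kg))
    pool = URBAN + RURAL
    return [rng.choice(pool) for _ in range(steps)]
```

In this sketch, two visitors in the same weight band receive the same collage, while visitors in different bands usually hear different ones. How the real installation derives and uses the ID is not specified in the summary.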

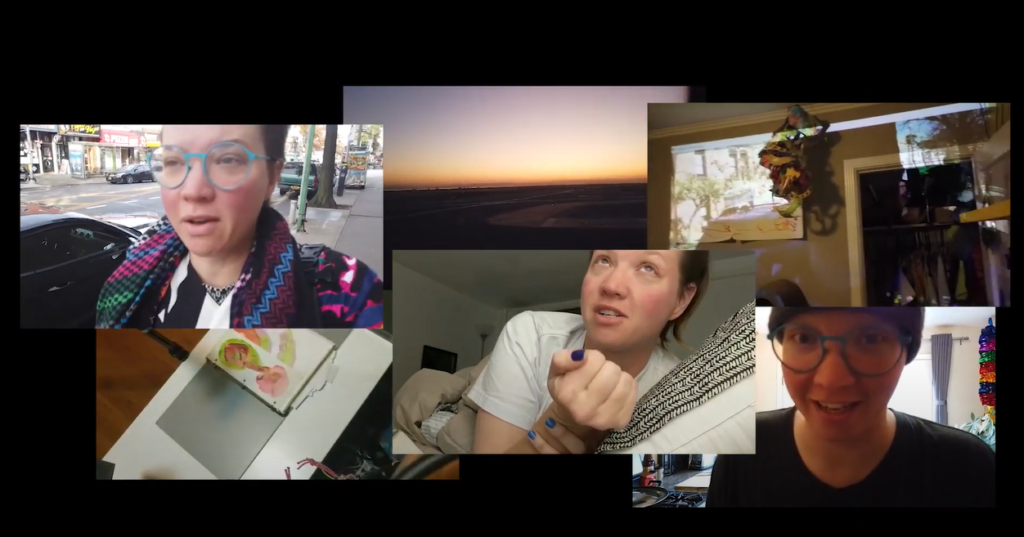

Prosthetic Memory

M Eifler created a project called Prosthetic Memory after a serious brain injury that impaired her memory. Her long-term memory is severely reduced and can hold only a little information. Instead of being able to recall important memories of her life, she can only remember simple information such as her phone number or how to ride a bike.

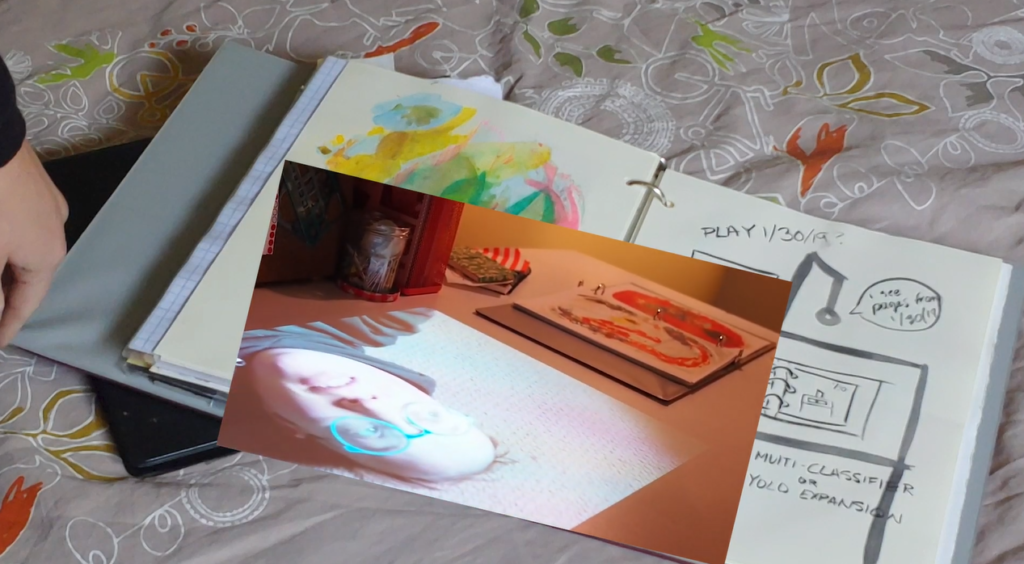

Prosthetic Memory has three components that work together to give her access to her memories through digital assistance.

- A custom machine learning algorithm that acts as a bridge between the physical world she interacts with and the virtual memories that make up her prosthetic (additional) memory.

- Handmade paper journals that include drawings, collages and writings are digitally registered into a database and work as triggers for memories.

- Video documentation, similar to vlogging, that captures events, emotions, reactions and feelings that occur during her everyday life.

When a picture from the paper journals is shown to the AI’s camera, the network recognizes the visual trigger and gives her access to all the documented files from the day the paper artwork was created. Cameras placed all around her house monitor her actions and can offer access to similar memories depending on the task she is performing. Objects and tools around her house are also tracked by the AI, so she can revisit memories related to the object she is using.
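The lookup from a recognized journal page to that day's recordings could be sketched as follows. This is a simplified illustration, not the project's implementation: the image recognition step is replaced by a plain trigger ID, and the registry, file names, dates and the function `memories_for` are hypothetical placeholders.

```python
from datetime import date

# Hypothetical registry: journal artwork ID -> the day it was created.
JOURNAL_PAGES = {"collage-017": date(2019, 3, 4)}

# Hypothetical archive of recordings, each tagged with the day it was made.
RECORDINGS = [
    ("vlog_morning.mp4", date(2019, 3, 4)),
    ("vlog_evening.mp4", date(2019, 3, 4)),
    ("vlog_walk.mp4", date(2019, 3, 5)),
]


def memories_for(trigger_id):
    """Return all recordings from the day the recognized artwork was created."""
    day = JOURNAL_PAGES.get(trigger_id)
    if day is None:
        return []  # unknown trigger: nothing to show
    return [name for name, recorded in RECORDINGS if recorded == day]
```

Here `memories_for("collage-017")` returns the two recordings tagged with the same day as the collage, and an unregistered trigger returns an empty list.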

I believe that interaction design has a lot to offer people with disabilities, and that it can use technology as efficiently as possible to assist people with special needs. The project “Prosthetic Memory” is very inspiring and fuels a lot of creativity in that area.

Digital Prayer

Kristina Tica draws attention to the form, limitations and advantages of a medium with regard to artistic expression. The interaction between the artist and the medium used to produce an artifact is a reciprocal process: the artist influences the medium and the medium influences the artist, constantly and without interruption. For this art installation, Kristina Tica used visual coding and AI neural networks. She emphasized the interest that arises from trying to get behind the invisible mechanism by which a medium’s user interface influences the interaction between the artist and the artwork. With this in mind, she experiments with visual programming in an attempt to discover its boundaries and limitations.


Her team created an AI neural network that collected and analyzed more than 40,000 pictures of traditional Christian Orthodox iconography and then generated religious depictions autonomously. Kristina believes that the chaotic world of numbers in code and the countless calculations performed by the neural network take on substance in the form of those pictures. Just as a picture silently communicates many unspoken words, the neural network uses pictures to communicate all the invisible numbers that stand behind it. It is worth mentioning that, when the installation is presented in physical space, both the generated artifacts and the code behind each of them will be shown to the audience.

In my opinion, and based on my cultural background, unifying religious artifacts with artificial intelligence is simultaneously fascinating and highly controversial.

As an individual, I was caught off guard when I stumbled upon this project because it made me realize that religion is one aspect of life that has not yet been subjected to any substantial form of digitalization. We live in a world where many aspects of our lives are heavily influenced by technology and digitalization, and humanity is actively seeking to digitalize even more areas of life. Faith and religion pose a huge topic for analysis and a challenging task when they become subject to AI and technology.

As an interaction designer, I find it challenging to think about the parameters and approaches that should be considered, or even allowed, when attempting to build an effective, hypothetical digitalized interaction between humans and their faith.

AI x Ecology

The panel AI x Ecology deals with the question of how technology can influence our environment and ecology. I have often asked myself how sustainable technology, internet use and especially streaming are, and how we can use technology to live more sustainably. In her talk “Computational Sustainability: Computing for a Better World and a Sustainable Future”, Carla P. Gomes gives an insight into her work and explains what sustainable development means.

Performance and Interaction

Animate Architecture • Build Installations • Collaborate With Performers & Machines • Code With Interfaces • Create Sonic Environments • Choreograph Light • Use Advanced Manufacturing • Reimagine Robots • Augment Bodies • Construct Virtual Realities – these are not just buzzwords; rather, they enable us to consider space, context, systems, objects and people as potential performers and to recognize the wide scope for creativity.

The Interactive Architecture Lab, based at the Bartlett School of Architecture, University College London, deals with exactly these topics and runs a Masters Programme in design for performance and interaction. Dr. Ruairi Glynn, the Director of the Lab, is trying to break boundaries and develop new kinds of practice within this Programme. The core of the Master is “the belief that the creation of spaces for performance and the creation of performance within them are symbiotic design activities”. He sees performance in interaction as a holistic design practice and presented a wide range of (student) projects at Ars Electronica 2020, which can also be seen as an influence on and inspiration for the current and future development of interaction design.

Gustav Klimt – “The Kiss” as Gigapixel

The painting “The Kiss” is not only one of the most famous paintings in Austria, but also an iconic work of art history. Even before the painter had finished his masterpiece, it was purchased by the Austrian state, and it has been in the Belvedere Museum in Vienna ever since. Every year thousands of visitors come to see the work of art.

u19 – create your world 2020

Creating your World to Change our Perspective – “Der Augenblick davor” (The Moment Before): this was the theme of this year’s Ars Electronica for all u19 participants. The talk presented the winners, who are listed below. It is fascinating to observe that many of the works deal with themes that show characteristic traits of the current Covid-19 situation, even though the projects were submitted before the pandemic.