In this blog entry, I take a look at a paper by Josh Urban Davis of the Department of Computer Science at Dartmouth College, presented at NIME 2019.

http://www.nime.org/proceedings/2019/nime2019_paper088.pdf

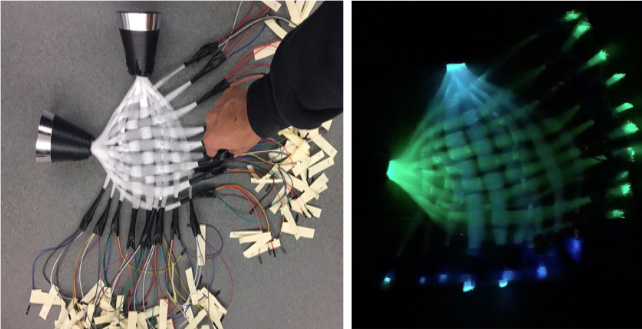

IllumiWear is an eTextile prototype that uses fiber optics both as interactive input and as visual output. Fiber optic cables are combined into bundles and then woven to create a bendable, glowing fabric. Light-emitting diodes (LEDs) are connected to one end of the fiber optic cables and light sensors to the other end, so the loss of light intensity that occurs when the fabric is bent can be measured.
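The measurement principle can be illustrated with a small Python sketch. It assumes that each fiber bundle has one LED/sensor pair, that a baseline reading is taken while the fabric lies flat, and that light loss is expressed as a fraction of that baseline. The function names are hypothetical and not taken from the paper.

```python
from typing import List


def light_loss(baseline: float, reading: float) -> float:
    """Fraction of light lost relative to the flat-fabric baseline (0.0 = no loss)."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    loss = (baseline - reading) / baseline
    # Clamp to [0, 1]: sensor noise can push a reading slightly above the baseline.
    return min(max(loss, 0.0), 1.0)


def loss_per_channel(baselines: List[float], readings: List[float]) -> List[float]:
    """Compute the light loss for every (hypothetical) LED/sensor channel."""
    if len(baselines) != len(readings):
        raise ValueError("one reading per baseline is required")
    return [light_loss(b, r) for b, r in zip(baselines, readings)]


# Example: three channels calibrated flat, then the fabric is bent near channels 2 and 3.
baselines = [800.0, 810.0, 790.0]
readings = [800.0, 600.0, 395.0]
print(loss_per_channel(baselines, readings))
```

Normalizing against a per-channel baseline keeps the result comparable across fibers whose LEDs or sensors differ slightly in brightness or sensitivity.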

With this technique, IllumiWear can distinguish between a touch, a slight bend and a harsh bend, and can also recover the location of these deformations on the fabric.
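A minimal sketch of how such readings could be turned into a gesture label and a position follows. It assumes the bundles are woven as a grid of row and column channels, each with its own LED/sensor pair, and that light loss is already normalized from 0.0 (no loss) to 1.0 (fully dark). The thresholds and function names are hypothetical placeholders, not values from the paper; a real device would need calibration.

```python
from typing import List, Optional, Tuple

# Hypothetical thresholds on normalized light loss (0.0 = no loss, 1.0 = dark).
TOUCH_MIN = 0.05
SLIGHT_BEND_MIN = 0.15
HARSH_BEND_MIN = 0.40


def classify(loss: float) -> str:
    """Map a normalized light loss to a coarse gesture label."""
    if loss >= HARSH_BEND_MIN:
        return "harsh bend"
    if loss >= SLIGHT_BEND_MIN:
        return "slight bend"
    if loss >= TOUCH_MIN:
        return "touch"
    return "none"


def locate(row_loss: List[float], col_loss: List[float]) -> Optional[Tuple[int, int, str]]:
    """Return (row, col, gesture) for the strongest deformation, or None.

    Assumes a woven grid: a deformation at (r, c) dims both row bundle r
    and column bundle c, so the crossing of the most-dimmed row and the
    most-dimmed column is taken as the location.
    """
    if not row_loss or not col_loss:
        return None
    r = max(range(len(row_loss)), key=lambda i: row_loss[i])
    c = max(range(len(col_loss)), key=lambda j: col_loss[j])
    # Both crossing bundles should be dimmed; use the weaker signal to be conservative.
    gesture = classify(min(row_loss[r], col_loss[c]))
    if gesture == "none":
        return None
    return r, c, gesture
```

For example, `locate([0.0, 0.5, 0.02], [0.01, 0.0, 0.45, 0.0])` reports a harsh bend at row 1, column 2. This simple "strongest row times strongest column" approach only handles one deformation at a time.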

MAPPING FOR MULTIMEDIA INTERACTIONS

In the final prototype, they implemented five modes of interaction with IllumiWear. Each of these gestures sends OSC (Open Sound Control) messages that trigger or modulate a sample. This data was then used in Ableton Live for sound generation and MIDI mapping.

The modes of interaction are:

- location-based touch input, similar to a keyboard

- pressure-sensitive touch input

- sliding gesture

- bending tangible input

- tangible deformation

- color variation-based input
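To illustrate the OSC side, here is a small Python sketch that encodes a gesture value as an OSC 1.0 message (null-padded address and type-tag strings followed by big-endian float32 arguments) and sends it over UDP. The OSC addresses, host and port are hypothetical. Receiving OSC in Ableton Live typically goes through an extra bridge such as a Max for Live device, so the target here is an assumption about the setup.

```python
import socket
import struct


def osc_string(s: str) -> bytes:
    """Encode an OSC-string: ASCII bytes, at least one null, padded to a multiple of 4."""
    b = s.encode("ascii") + b"\x00"
    return b + b"\x00" * (-len(b) % 4)


def osc_message(address: str, *floats: float) -> bytes:
    """Build an OSC 1.0 message whose arguments are all float32."""
    type_tags = "," + "f" * len(floats)
    payload = b"".join(struct.pack(">f", x) for x in floats)
    return osc_string(address) + osc_string(type_tags) + payload


# Hypothetical mapping from gesture name to OSC address.
GESTURE_ADDRESSES = {
    "touch": "/illumiwear/touch",
    "slide": "/illumiwear/slide",
    "bend": "/illumiwear/bend",
}


def send_gesture(sock: socket.socket, gesture: str, value: float,
                 host: str = "127.0.0.1", port: int = 9000) -> None:
    """Send one gesture value as a single-float OSC message over UDP."""
    sock.sendto(osc_message(GESTURE_ADDRESSES[gesture], value), (host, port))
```

For example, calling `send_gesture(sock, "bend", 0.45)` on a socket created with `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)` would send one float to `/illumiwear/bend`. Building the bytes by hand keeps the sketch dependency-free; in practice a library such as python-osc would usually be used instead.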

CONCLUSION

I really like this idea, as it shows a new way of interacting with a computer. In my opinion, it looks really cool and sounds very interesting too.