For this blog entry I decided to read the paper "Border: A Live Performance Based on Web AR and a Gesture-Controlled Virtual Instrument" by K. Nishida et al.

https://www.nime.org/proceedings/2019/nime2019_paper009.pdf

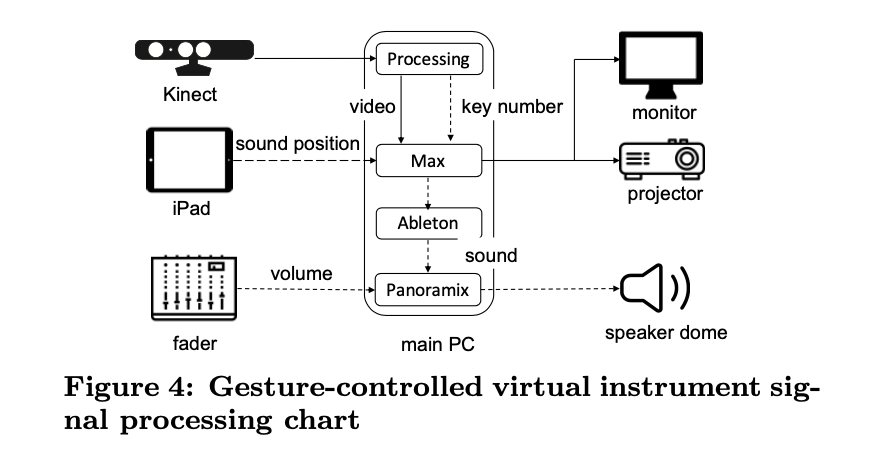

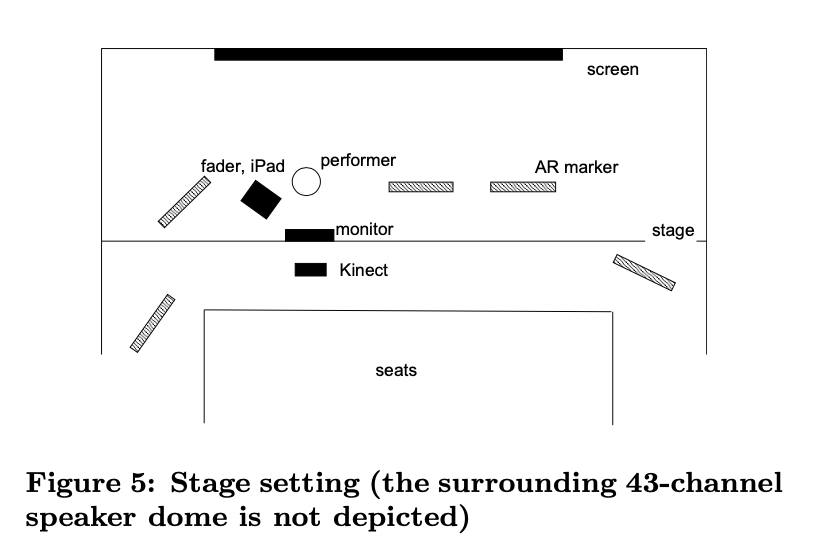

I chose this paper because I really enjoy going to concerts and live performances, and the idea of letting the audience interact with the performer immediately caught my interest.

For this project, the authors developed an AR web application. They chose the web because every audience member can access it easily without downloading anything. "Border" lets performers record their movements, which are then replayed in the AR environment. The sound is controlled mainly through gesture tracking with a Kinect. The performer can switch between a selection mode, in which a virtual instrument is chosen, and a play mode, in which that instrument is played. The audience could view the recordings through the web application by pointing their devices at five AR markers on stage. Each marker measured 1 m × 1 m, large enough that even people at the back could use them.
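To make the two-mode interaction a bit more concrete, here is a minimal Python sketch of how such mode switching could work. It is not the authors' implementation: the `GestureInstrument` class and the gesture names `toggle` and `next` are hypothetical, and real Kinect skeleton data is replaced by plain strings.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum, auto


class Mode(Enum):
    SELECTION = auto()  # performer chooses a virtual instrument
    PLAY = auto()       # gestures produce sound on the chosen instrument


@dataclass
class GestureInstrument:
    """Hypothetical two-mode controller, not the method from the paper."""
    instruments: list[str]
    mode: Mode = Mode.SELECTION
    selected: int = 0

    def handle(self, gesture: str) -> str | None:
        # Hypothetical "toggle" gesture switches between the two modes
        if gesture == "toggle":
            self.mode = Mode.PLAY if self.mode is Mode.SELECTION else Mode.SELECTION
            return None
        if self.mode is Mode.SELECTION:
            # Hypothetical "next" gesture cycles through the instruments;
            # any other gesture is ignored in selection mode
            if gesture == "next":
                self.selected = (self.selected + 1) % len(self.instruments)
            return None
        # In play mode, every other gesture becomes a note event
        return f"{self.instruments[self.selected]}:{gesture}"


controller = GestureInstrument(["drum", "synth"])
controller.handle("next")    # select "synth"
controller.handle("toggle")  # switch to play mode
print(controller.handle("swipe"))
```

Separating the modes this way means the same gesture can have a different meaning depending on the current mode, which is one plausible reason for such a design when only a limited set of gestures can be tracked reliably.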